Building at the Speed of Trust: The Delicate Dance Between AI and Community

Published in ATÖLYE Insights · 8 min read · July 30, 2025

Coauthors: Engin Ayaz, Melissa Clissold

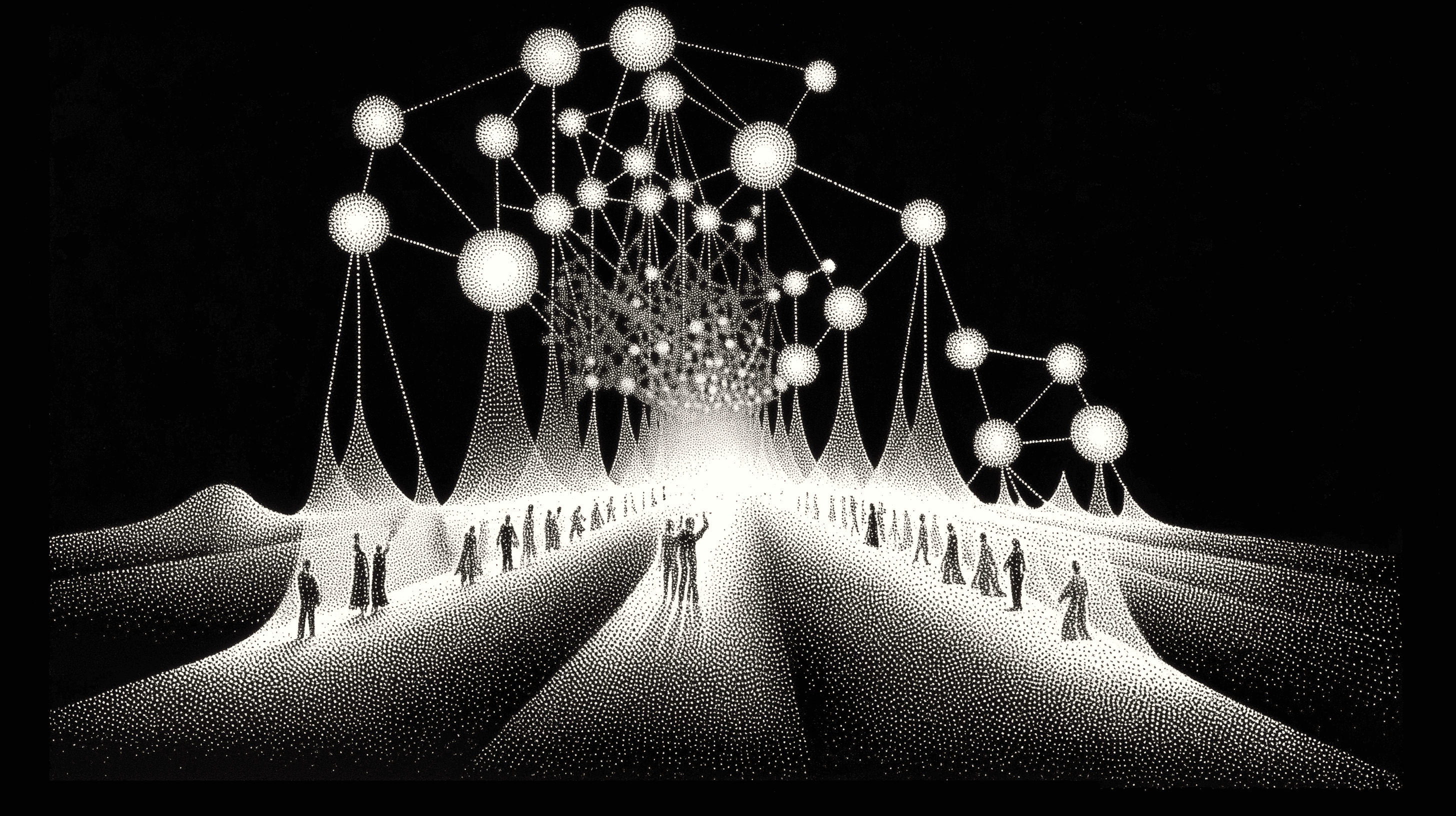

In a world increasingly driven by acceleration, where machine learning predicts behaviors and algorithms shape what we see, hear, and value, communities still resist the fast lane.

Why?

Because communities, unlike networks or audiences, are not transactional. They are deeply relational, slow-growing organisms – woven together through shared purpose, mutual recognition, and collective, compounding trust.

And while AI offers novel tools to help shape, scale, and support these ecosystems, its deployment must be approached with reverence for the human texture at the heart of every thriving community.

At ATÖLYE, where we design for transformation through the power of communities, we have come to understand this tension intimately. Our own Community-Powered Transformation model, rooted in our Playbook, is built on three stages: curation, engagement, and activation. Each of these stages is a space where AI can be either a force multiplier or a subtle threat to integrity. Alongside them, we have added two call-outs, one on Community Management and another on Trust, both of which are essential ingredients for success.

Let us explore each, with eyes open to both promise and peril.

1. Curate with Intelligence, But Don’t Lose the Soul

Community-building starts with curation: the delicate act of gathering people who are not only aligned by interest, but attuned by values.

AI excels at pattern recognition – analyzing portfolios, social signals, and behavioral data to understand the nuances of engagement and belonging. Research from the MIT Center for Collective Intelligence demonstrates that systems like Supermind Ideator – where generative AI supports collaborative teams – can enhance creative problem-solving and sensemaking, and, in related projects, improve the efficiency of matching expertise and opportunities in complex networks (MIT CCI, 2024).

This points to tremendous potential for any software or tool that sits on such data to conduct sensemaking and generate recommendations at scale – whether for longlisting applications, shortlisting candidates, streamlining interviews, or refining vetting processes. And given that curation is the key ingredient for any community, the prospect ahead is an exciting one.

To this end, platforms like Neol, our sibling company focusing on AI-enabled talent matchmaking, use machine intelligence to match experts with challenges, representing the frontier of AI-powered talent curation. As Neol’s model shows, AI can elevate discovery and streamline decision-making, surfacing people we might otherwise overlook.

While technology offers an exciting prospect, it is important to acknowledge the tension that emerges here. As the Ethics and Governance of AI Initiative at Harvard’s Berkman Klein Center cautions, data often acts as a mirror of the past – reflecting existing patterns and gaps in representation. Their research warns that without deliberate design for diversity and inclusion, critical human elements like sentiment, emergent synergy, and latent potential can fall through the cracks (Berkman Klein Center, 2024).

Not everything that matters can be captured in data – whether it’s unspoken commonalities, subtle connections, or the spark of new ideas.

AI may be adept at showing who is similar or different, but it still takes human insight and a discerning eye to recognize potential, invite the unusual suspect, and notice what is only just beginning to emerge.

Curation, at its best, is about designing for resonance – not just relevance.

2. Engage with Frequency, But Lead with Empathy

Once people are gathered, how do we build energy, cohesion, and momentum?

AI can help on this front. Matching members by shared interests. Suggesting conversation topics. Generating personalized prompts to nudge participation. From Slack plugins to community OS platforms like Circle.so or Mighty Networks, tools now abound for automating the “hello” – and surfacing the right people at the right moment.

And yet, a word of caution. As Business Insider notes in its recent investigation, people increasingly sense when they’re talking to a bot – and often recoil. In fact, the use of AI to simulate human conversation in group chats is already “creating loneliness masked as connection” (Business Insider, 2025).

At ATÖLYE, we have found that while AI can initiate interaction, it is the human follow-up – the nuance, the listening, the memory of past exchanges – that builds deep relationships. This is what we call relational trust, and it cannot be faked or fast-tracked.

3. Activate with Insight, Not Just Incentives

Every community has untapped potential – ideas unspoken, skills dormant, collaborations waiting to happen.

Here, AI can serve as a catalyst. Systems that map influence networks, track engagement patterns, and send timely nudges can help activate silent members and surface collective intelligence. Just as fitness apps prompt us to move, community systems can prompt us to contribute.

Carnegie Mellon’s recent research suggests that when AI is combined with real-time, human-sensitive feedback, it not only improves performance, it also builds trust (ScienceDaily, 2025). The key is thoughtful orchestration. Nudges work best when rooted in shared purpose, not just gamified incentives.

At ATÖLYE, we design activation moments – sprints, rituals, provocations – that spark action without forcing it. We believe AI can scaffold participation. But it cannot compel commitment.

4. Support the Stewards: AI as Infrastructure for the Invisible Work

Community managers are the unsung heroes of this ecosystem. Their work is often invisible, emotional, and deeply intuitive. It is also exhausting: as the CMX Community Industry Report notes, community managers are frequently at risk of burnout due to the high emotional load and constant context-switching (CMX, 2024).

AI can ease their burden. From drafting onboarding flows to summarizing long threads to predicting churn, machine intelligence can reduce operational load and enhance pattern detection. Studies from Stanford HAI and Harvard Business Review show that natural language processing tools can automate up to 30–40% of routine communication tasks without eroding the perceived quality of member engagement (Stanford HAI, 2023; HBR, 2022). AI can help community stewards spend less time managing tools – and more time holding space.

But AI cannot replace the art of sensing the room.

As MIT Sloan Management Review research on “empathic leadership” highlights, qualities like emotional attunement, trust‑building, and moral reasoning remain uniquely human capabilities – ones that no current machine learning model can replicate at a relational depth (MIT SMR, 2025). AI cannot perceive subtle shifts in tone, tension, or trust. It cannot craft an apology with humility. For all its brilliance, it lacks moral imagination.

So let AI handle the repeatable. Let humans hold the irreducible.

5. Beware the Speed Trap: Why Trust Doesn’t Scale Like Code

Here lies the paradox: AI is fast. Trust is slow.

KPMG’s 2025 global study on trust in AI indicates that while 72% of executives are adopting AI at scale, a majority of users still express concerns around authenticity, data privacy, and social manipulation (KPMG, 2025).

And in the community space, those concerns are amplified. A recent AI experiment on Reddit, where bots were used to subtly persuade users, backfired catastrophically. The result? Backlash, distrust, and erosion of the platform’s core advantage: human dialogue (News.com.au, 2025).

This should give us pause. Because once a community loses its sense of safety and authenticity, recovery is nearly impossible. Dilution is a one-way road.

As we like to say: “Trust takes years to build, seconds to break, and forever to repair.”

What Now? A Compass for Human-AI Co-Creation

If we want to leverage AI while preserving community integrity, we must:

- Curate with intention: Let AI surface options; let humans select with wisdom.

- Engage with nuance: Let AI prompt; let humans respond.

- Activate with care: Let AI spark; let humans sustain.

- Support the stewards: Let AI streamline; let humans guide.

- Protect the sacred: Let AI be fast; let humans be true.

Your Invitation

We’re evolving our Community Playbook with a brand-new chapter exploring the intersection of AI and communities – how emerging tools can amplify human connection, collective intelligence, and shared purpose.

This is very much a work in progress, and we’d love to shape it together. Your insights, provocations, and lived experiences will help us ensure this chapter reflects not just what’s possible, but what’s meaningful.

💡 Share your thoughts – what’s top of mind for you when it comes to AI in community building?

📬 Reach out if you’d like to co-create this chapter with us.

Because when humans and machines learn from one another in service of community, extraordinary things can happen.

References:

- MIT Center for Collective Intelligence (2024) – Generative AI and Collective Intelligence.

- Berkman Klein Center for Internet & Society (2024) – Ethics and Governance of AI Initiative.

- Stanford HAI (2024) – Covert Racism in AI: How Language Models Are Reinforcing Outdated Stereotypes.

- CMX Hub (2024) – 2024 CMX Community Industry Report.

- Stanford HAI (2023) – Generative AI and the Future of Work: Industry Brief.

- MIT Sloan Management Review (2025) – These Human Capabilities Complement AI’s Shortcomings.

- Business Insider (2025) – The Rise of ChatGPT Group Chats: How Generative AI is Changing Friendship, Marriage, and Loneliness.

- ScienceDaily (2025) – AI Can Help Build Better Human Teams by Predicting Group Success.

- KPMG (2025) – Trust, Attitudes and Use of AI: Global Report.

- News.com.au (2025) – University’s AI Experiment Reveals Shocking Truth About Future of Online Discourse.